Unit 2

1) How divide and conquer approach work?

The divide and conquer approach is a fundamental algorithm design paradigm used to solve complex problems by breaking them down into smaller, more manageable subproblems. Here’s how it works:

Steps of Divide and Conquer

-

Divide:

- The problem is divided into several smaller subproblems that are similar to the original problem but smaller in size.

- This division continues recursively until the subproblems become simple enough to be solved directly.

-

Conquer:

- Each of the smaller subproblems is solved independently. If the subproblems are still too large, the divide and conquer process is applied recursively.

- This step often involves calling the same algorithm on the smaller subproblems.

-

Combine:

- Once the subproblems have been solved, their solutions are combined to form a solution to the original problem.

- This step is crucial as it integrates the results from the subproblems to produce a final result.

Characteristics of Divide and Conquer

- Recursive Nature: The approach is inherently recursive, where each level of recursion breaks the problem down further.

- Efficiency: It often leads to efficient algorithms, especially for problems that exhibit overlapping subproblems and optimal substructure properties.

- Problem-Specific: The effectiveness of this approach depends on the nature of the problem being solved.

Examples of Divide and Conquer Algorithms

-

Merge Sort:

- Divide: Split the array into two halves.

- Conquer: Recursively sort both halves.

- Combine: Merge the two sorted halves.

-

Quick Sort:

- Divide: Choose a pivot element and partition the array around it.

- Conquer: Recursively sort the left and right partitions.

- Combine: Since partitions are sorted in place, no explicit combining step is needed.

-

Binary Search:

- Divide: Compare the target value to the middle element of a sorted array.

- Conquer: If they are not equal, recursively search in either the left or right half based on whether the target is less than or greater than the middle element.

- Combine: No explicit combining step; return results from recursive calls.

Conclusion

The divide and conquer approach effectively reduces complex problems into simpler ones, allowing for efficient solutions through recursive processing and combination of results. It is widely applicable in various fields of computer science, particularly in sorting, searching, and optimization algorithms.

2) What is Divide and Conquer Technique? Explain how to apply divide and conquer technique for solving the problem?

Divide and Conquer Technique

Definition: The divide and conquer technique is a fundamental algorithm design paradigm used to solve complex problems by breaking them down into smaller, more manageable subproblems. This approach involves three main steps: dividing the problem into smaller parts, conquering (solving) those parts recursively, and then combining the solutions to form a complete solution to the original problem.

Steps to Apply Divide and Conquer Technique

-

Divide:

- Split the original problem into several smaller subproblems that are similar to the original problem but smaller in size. This division continues recursively until the subproblems become simple enough to be solved directly.

-

Conquer:

- Solve each of the smaller subproblems independently. If any of these subproblems are still too large, apply the divide and conquer technique recursively on those subproblems as well.

-

Combine:

- Once the subproblems have been solved, combine their solutions to form a solution for the original problem. This step integrates the results from the subproblems.

Example of Applying Divide and Conquer

Let’s illustrate how to apply the divide and conquer technique using the Merge Sort algorithm as an example.

Problem: Sorting an Array

-

Divide:

- Given an array, split it into two halves.

- Continue splitting each half until you have subarrays that contain only one element (since a single element is inherently sorted).

-

Conquer:

- Recursively sort both halves of the array.

- Since you are now dealing with smaller arrays, this step will eventually reach the base case where each array has one element.

-

Combine:

- Merge the two sorted halves back together into a single sorted array.

- During this merging process, compare elements from both halves and arrange them in sorted order.

Example Walkthrough of Merge Sort

Consider an array: [38, 27, 43, 3, 9, 82, 10].

-

Divide:

- Split into

[38, 27, 43]and[3, 9, 82, 10]. - Further split

[38, 27, 43]intoand `[27, 43]`, then split `[27, 43]` intoand “. - Continue until you have individual elements:

,,,,,, “.

- Split into

-

Conquer:

- Each individual element is sorted by default.

- Now merge back:

- Merge

andto get[27, 43]. - Merge “with

[27, 43]to get[27, 38, 43]. - Move on to the right half: merge

,, and then merge with “, resulting in[3, 9, 10, 82].

- Merge

-

Combine:

- Finally merge the two sorted halves:

- Merge

[27, 38, 43]with[3, 9, 10, 82]to get a fully sorted array:

[3, 9, 10, 27, 38, 43, 82] - Merge

- Finally merge the two sorted halves:

Conclusion

The divide and conquer technique is a powerful method for solving problems efficiently by breaking them down into simpler components. This approach not only simplifies complex problems but also leads to efficient algorithms like Merge Sort and Quick Sort. By applying this technique systematically—dividing into smaller problems, solving each independently, and combining results—many computational challenges can be tackled effectively.

3) What do you mean by Divide & Conquer Approach? List advantages and disadvantages of it.

Divide and Conquer Approach

Definition: The divide and conquer approach is a fundamental algorithm design paradigm that involves breaking a problem down into smaller, more manageable subproblems, solving each subproblem independently, and then combining their solutions to solve the original problem. This technique is particularly effective for problems that can be recursively divided into similar subproblems.

Steps of the Divide and Conquer Approach

- Divide: Split the original problem into smaller subproblems.

- Conquer: Recursively solve each subproblem.

- Combine: Merge the solutions of the subproblems to create a solution for the original problem.

Advantages of Divide and Conquer

- Efficiency: Many divide and conquer algorithms, such as Merge Sort and Quick Sort, have efficient time complexities (e.g., ), making them suitable for large datasets.

- Simplicity: The recursive nature of divide and conquer can simplify the implementation of complex algorithms, making them easier to understand and maintain.

- Parallelism: Subproblems can often be solved independently, allowing for parallel processing and potentially improving performance on multi-core systems.

- Optimal Solutions: For many problems, divide and conquer guarantees an optimal solution by ensuring that all possible configurations are considered through recursive exploration.

Disadvantages of Divide and Conquer

- Overhead: The recursive calls can introduce overhead due to function calls and stack space usage, which may lead to inefficiencies in certain cases.

- Complexity in Implementation: While the concept is simple, implementing a divide and conquer algorithm can be complex, especially when combining solutions from subproblems.

- Memory Usage: Recursive algorithms may use more memory due to the call stack, which can be a limitation for deep recursions or large datasets.

- Not Always Applicable: Some problems do not lend themselves well to this approach and may be better suited for other algorithmic strategies (e.g., dynamic programming).

Conclusion

The divide and conquer approach is a powerful technique in algorithm design that effectively breaks down complex problems into simpler components. While it offers significant advantages in terms of efficiency and simplicity, it also comes with challenges related to overhead, implementation complexity, and memory usage. Understanding when to apply this approach is crucial for developing efficient algorithms.

4) Write iterative and recursive algorithm for finding the factorial of N. Derive the time complexity of both algorithms.

Factorial of N

The factorial of a non-negative integer is the product of all positive integers less than or equal to . It is denoted as and defined as:

Iterative Algorithm for Factorial

The iterative approach uses a loop to calculate the factorial.

Iterative Algorithm

def factorial_iterative(N):

result = 1

for i in range(1, N + 1):

result *= i

return resultRecursive Algorithm for Factorial

The recursive approach calls the function itself to calculate the factorial.

Recursive Algorithm

def factorial_recursive(N):

if N == 0:

return 1

else:

return N * factorial_recursive(N - 1)Time Complexity Analysis

Iterative Algorithm Time Complexity

- The iterative algorithm runs a loop from 1 to , performing a constant amount of work (multiplication) in each iteration.

- Time Complexity:

Recursive Algorithm Time Complexity

- The recursive algorithm makes a call to itself times, reducing by 1 each time until it reaches the base case (when ).

- Each recursive call involves a constant amount of work (multiplication).

- Time Complexity:

Summary

Both the iterative and recursive algorithms for calculating the factorial of have a time complexity of . However, the iterative method is generally more space-efficient because it does not involve the overhead of recursive function calls, which can lead to stack overflow for large values of . The recursive method, while elegant and straightforward, may consume more memory due to the call stack.

5) Give the recursive algorithm to find Fibonacci sequence. Comment on the complexity of the algorithm.

Recursive Algorithm to Find Fibonacci Sequence

The Fibonacci sequence is defined as follows:

- For :

Here’s the recursive algorithm to compute the -th Fibonacci number:

Recursive Algorithm

def fibonacci_recursive(n):

if n == 0:

return 0

elif n == 1:

return 1

else:

return fibonacci_recursive(n - 1) + fibonacci_recursive(n - 2)Complexity Analysis

Time Complexity

- The time complexity of the recursive Fibonacci algorithm is exponential. Specifically, it can be expressed as .

- This is because each call to

fibonacci_recursiveresults in two further calls (except for the base cases), leading to a binary tree of calls. - The number of calls grows exponentially with , as each Fibonacci number requires the computation of its two predecessors.

Space Complexity

- The space complexity is due to the recursion stack. In the worst case, the maximum depth of the recursion will be , as the function will call itself until it reaches the base cases.

Conclusion

While the recursive approach to calculating Fibonacci numbers is straightforward and elegant, it is inefficient for large values of due to its exponential time complexity. For practical applications, iterative methods or dynamic programming approaches (such as memoization) are preferred to improve efficiency.

6) What is recurrence? Solve recurrence equation T (n) = T (n-1) + n using forward substitution and backward substitution method.

What is Recurrence?

In computer science, a recurrence is an equation that recursively defines a sequence of values. It expresses the value of a function in terms of its values at smaller inputs. Recurrences are commonly used to analyze the time complexity of recursive algorithms.

Solving the Recurrence Equation

We will solve the recurrence equation using both forward substitution and backward substitution methods.

Forward Substitution Method

-

Unroll the Recurrence:

- Start from :

- Substitute :

- Substitute back into the first equation:

- Continuing this process:

-

Identify the Base Case:

- Assume , then :

- The sum of the first integers is given by:

-

Final Expression:

- Therefore, we have:

- Assuming , where is a constant:

-

Asymptotic Behavior:

- The dominant term as :

Backward Substitution Method

-

Express in Terms of Base Case:

- Start from :

- We know that:

- Continuing this way, we can express:

- …

- Up to :

-

Sum of First N Integers:

- The sum can be expressed as:

-

Final Expression:

- Thus, we have:

-

Asymptotic Behavior:

- The dominant term as :

Conclusion

Both the forward substitution and backward substitution methods yield the same result for the recurrence relation . The solution is:

Thus, the time complexity of the recurrence is:

7) Explain master Method and solve the following recurrence equation withmaster method.

a. T(n)= 9T(n/3) + n

b. T(n)= 3T(n/4) + nlgn

c. T(n)= T(n/2) + 1

d. T(n)= 7T(n/3) + n2

Master Method

The Master Method provides a way to analyze the time complexity of divide-and-conquer algorithms that fit a specific form of recurrence relations. It is particularly useful for solving recurrences of the type:

where:

- is the size of the problem,

- is the number of subproblems,

- is the factor by which the problem size is reduced,

- is a function that describes the cost of the work done outside the recursive calls.

The Master Theorem states that we can compare with to determine :

-

Case 1: If is polynomially smaller than , i.e., there exists a constant such that:

Then:

-

Case 2: If is asymptotically equal to , i.e., there exists a constant such that:

for some , then:

-

Case 3: If is polynomially larger than , i.e., there exists a constant such that:

and if for some constant , then:

Solving Recurrence Equations Using the Master Method

a.

- Here, .

- Calculate .

Comparison:

- Since , where , we fall into Case 1.

Conclusion:

b.

- Here, .

- Calculate .

Comparison:

- Since , we check if it satisfies the regularity condition.

To check regularity condition:

- Check if: Which simplifies to: This holds true for large enough constants.

Conclusion: Using Case 3, we have:

c.

- Here,

- Calculate:

Comparison:

- Since , we fall into Case 2.

Conclusion:

d.

- Here,

- Calculate:

Comparison:

- We see that since , we check if it satisfies regularity condition.

To check regularity condition:

- Check if: This simplifies to checking if: This holds true for large enough constants.

Conclusion: Using Case 3, we have:

Summary

The Master Method provides an efficient way to analyze the time complexity of divide-and-conquer recurrences. The solutions for the given recurrences are:

a.

b.

c.

d.

8) Which searching algorithm required Sorted list of elements?

The searching algorithm that requires a sorted list of elements is Binary Search.

Explanation of Binary Search

Binary Search works by repeatedly dividing the sorted list in half to locate a target value. The algorithm begins by comparing the target value to the middle element of the list:

- If the target value is equal to the middle element, the search is successful.

- If the target value is less than the middle element, the search continues in the left half of the list.

- If the target value is greater than the middle element, the search continues in the right half of the list.

This process is repeated until the target value is found or the sublist size becomes zero.

Time Complexity

- The time complexity of Binary Search is , making it very efficient for searching in sorted lists compared to linear search algorithms, which have a time complexity of .

Conclusion

In summary, Binary Search is a highly efficient searching algorithm that requires a sorted list of elements to function correctly.

9) Explain the use of Divide and Conquer Technique for Binary Search Method.Give the algorithm for Binary Search Method. What is the complexity of Binary Search Method?

Divide and Conquer Technique in Binary Search

Divide and Conquer is a powerful algorithmic paradigm that involves breaking a problem down into smaller subproblems, solving each of those subproblems independently, and then combining their solutions to solve the original problem. The Binary Search algorithm is a classic example of this technique applied to searching in a sorted array.

How Binary Search Uses Divide and Conquer

- Divide: The algorithm starts by dividing the sorted array into two halves. It calculates the middle index of the array.

- Conquer: It compares the target value with the middle element:

- If the target value is equal to the middle element, the search is successful.

- If the target value is less than the middle element, the search continues in the left half of the array.

- If the target value is greater than the middle element, the search continues in the right half of the array.

- Combine: Since each recursive call operates independently on its respective half of the array, there is no need for a combining step as in other divide and conquer algorithms.

Algorithm for Binary Search

Here’s a simple implementation of Binary Search using both iterative and recursive approaches:

Iterative Approach

def binary_search_iterative(arr, target):

left, right = 0, len(arr) - 1

while left <= right:

mid = left + (right - left) // 2

# Check if target is present at mid

if arr[mid] == target:

return mid

# If target is greater, ignore left half

elif arr[mid] < target:

left = mid + 1

# If target is smaller, ignore right half

else:

right = mid - 1

return -1 # Target not foundRecursive Approach

def binary_search_recursive(arr, target, left, right):

if left > right:

return -1 # Target not found

mid = left + (right - left) // 2

# Check if target is present at mid

if arr[mid] == target:

return mid

# If target is greater, search in right half

elif arr[mid] < target:

return binary_search_recursive(arr, target, mid + 1, right)

# If target is smaller, search in left half

else:

return binary_search_recursive(arr, target, left, mid - 1)Complexity of Binary Search Method

- Time Complexity: The time complexity of Binary Search is , where is the number of elements in the array. This logarithmic complexity arises because each comparison effectively halves the size of the search space.

- Space Complexity:

- For the iterative approach, the space complexity is since it uses a constant amount of space.

- For the recursive approach, the space complexity is due to the recursive call stack.

Conclusion

The Divide and Conquer technique effectively optimizes search operations by reducing the problem size with each step in Binary Search. This results in a highly efficient algorithm for finding elements in sorted arrays with a time complexity of .

10) Analyze quick sort algorithm for best case, average case and worst casewith example. In which case it performs similar to selection sort?

Quick Sort Algorithm Analysis

Quick Sort is a popular sorting algorithm that uses the divide-and-conquer approach to sort elements. It works by selecting a “pivot” element from the array and partitioning the other elements into two sub-arrays according to whether they are less than or greater than the pivot. The sub-arrays are then sorted recursively.

Time Complexity Analysis

-

Best Case:

- The best-case scenario occurs when the pivot divides the array into two nearly equal halves at each level of recursion. This results in a balanced partitioning.

- Time Complexity:

- Example: For an already sorted array where the pivot is chosen as the median:

- Array:

[1, 2, 3, 4, 5]with pivot3. - Partitioning results in two sub-arrays:

[1, 2]and[4, 5].

- Array:

-

Average Case:

- On average, Quick Sort also achieves a time complexity of . This is due to the random nature of pivot selection leading to reasonably balanced partitions.

- Example: For an array like

[3, 6, 8, 10, 1, 2, 1], if6is chosen as the pivot:- Partitioning results in

[3, 1, 2, 1]and[8, 10], leading to further recursive calls.

- Partitioning results in

-

Worst Case:

- The worst-case scenario occurs when the pivot is always the smallest or largest element in the partitioned array. This leads to unbalanced partitions and results in recursive calls with elements remaining each time.

- Time Complexity:

- Example: For an already sorted array where the smallest or largest element is consistently chosen as the pivot:

- Array:

[1, 2, 3, 4, 5]with pivot1. - This results in partitions like

[]and[2, 3, 4, 5], leading to inefficient sorting.

- Array:

Comparison with Selection Sort

Quick Sort performs similarly to Selection Sort in its worst-case scenario. Both algorithms exhibit a time complexity of when dealing with certain types of input:

- Selection Sort always has a time complexity of regardless of input because it always scans through the entire unsorted portion of the array to find the minimum element for each position.

- Quick Sort’s worst-case performance occurs under similar conditions (e.g., already sorted data with poor pivot selection), leading to inefficient partitioning.

Conclusion

In summary:

- Best Case: (balanced partitions).

- Average Case: (randomized pivots).

- Worst Case: (poor pivot choices).

- Quick Sort behaves similarly to Selection Sort in its worst-case scenario with a time complexity of .

11) Explain how multiplication of large integers can be done efficiently by usingdivide and conquer technique?

Efficient Multiplication of Large Integers Using Divide and Conquer

The multiplication of large integers can be efficiently accomplished using the divide and conquer technique, particularly through an algorithm known as Karatsuba multiplication. This method reduces the complexity of multiplying two large numbers compared to the traditional grade-school method.

Overview of Karatsuba Multiplication

Karatsuba’s algorithm works by breaking down the multiplication of two large numbers into smaller parts, allowing for fewer multiplicative operations. The key idea is to split each number into two halves and recursively multiply these halves.

Steps of Karatsuba Multiplication

-

Split the Numbers:

- Given two large integers and , split them into two halves:

- Let (where is half the number of digits in )

- Let

- Given two large integers and , split them into two halves:

-

Recursive Multiplication:

- Compute three products using the smaller parts:

- (the product of the lower parts)

- (the product of the higher parts)

- (cross-term product)

- Compute three products using the smaller parts:

-

Combine the Results:

- The final result can be computed as: This combines the results from the three multiplications.

Complexity Analysis

- The traditional multiplication algorithm has a time complexity of , where is the number of digits in the numbers being multiplied.

- Karatsuba’s algorithm reduces this to approximately . This is achieved by reducing the number of multiplicative operations needed from four (as in traditional methods) to three.

Example

Let’s illustrate with a simple example:

- Suppose we want to multiply and .

-

Split:

- Let

- Let

-

Compute:

-

Combine:

- Final result:

Conclusion

The divide and conquer technique, particularly through Karatsuba multiplication, allows for efficient multiplication of large integers by reducing the number of required multiplicative operations. This method significantly improves performance over traditional multiplication algorithms, especially for very large numbers, making it a powerful tool in computational mathematics and computer science.

12) Explain how divide and conquer method help multiplying two squareMatrices. OR Explain Strasson’s algorithm for matrix multiplication.

Multiplying Two Square Matrices Using Divide and Conquer

The divide and conquer method can significantly improve the efficiency of matrix multiplication, especially for large matrices. One of the most well-known algorithms that utilize this technique is Strassen’s Algorithm. This algorithm reduces the number of multiplications required to multiply two matrices compared to the conventional method.

Steps of the Divide and Conquer Method for Matrix Multiplication

-

Divide:

- Given two square matrices and of size , divide each matrix into four submatrices:

- For matrix :

- For matrix :

- Given two square matrices and of size , divide each matrix into four submatrices:

-

Recursive Multiplication:

- Instead of performing the standard multiplication, which requires multiplications for the four submatrices, Strassen’s algorithm reduces this to multiplications by calculating the following:

- Instead of performing the standard multiplication, which requires multiplications for the four submatrices, Strassen’s algorithm reduces this to multiplications by calculating the following:

-

Combine:

- The final result matrix can be constructed using the products calculated above:

- The submatrices of the resulting product matrix are computed as follows:

- The submatrices of the resulting product matrix are computed as follows:

- The final result matrix can be constructed using the products calculated above:

Complexity Analysis

- The traditional method for multiplying two matrices has a time complexity of .

- Strassen’s algorithm reduces this complexity to approximately , which is about . This reduction is significant for large matrices and demonstrates the power of the divide and conquer approach.

Conclusion

The divide and conquer method enhances matrix multiplication by breaking down larger problems into smaller subproblems, allowing for more efficient calculations through recursive steps. Strassen’s algorithm exemplifies how this technique can reduce computational complexity, making it a valuable approach in algorithm design for linear algebra operations.

13) Write an algorithm for quick sort and derive best case, worst case usingdivide and conquer technique also trace given data (3,1,4,5,9,2,6,5).

Quick Sort Algorithm

Quick Sort is a divide-and-conquer sorting algorithm that works by selecting a “pivot” element from the array and partitioning the other elements into two sub-arrays according to whether they are less than or greater than the pivot. The sub-arrays are then sorted recursively.

Quick Sort Algorithm

Here is a simple implementation of Quick Sort:

def quick_sort(arr):

if len(arr) <= 1:

return arr

else:

pivot = arr[len(arr) // 2] # Choosing the middle element as pivot

left = [x for x in arr if x < pivot] # Elements less than pivot

middle = [x for x in arr if x == pivot] # Elements equal to pivot

right = [x for x in arr if x > pivot] # Elements greater than pivot

return quick_sort(left) + middle + quick_sort(right)Complexity Analysis

Best Case

- The best-case scenario occurs when the pivot divides the array into two nearly equal halves at each level of recursion.

- Time Complexity:

- This happens when the pivot is chosen optimally, leading to balanced partitions.

Worst Case

- The worst-case scenario occurs when the pivot is always the smallest or largest element in the partitioned array, leading to unbalanced partitions.

- Time Complexity:

- This typically happens when the input array is already sorted (or reverse sorted) and the first or last element is consistently chosen as the pivot.

Tracing Quick Sort on Given Data

Let’s trace Quick Sort using the input data: (3, 1, 4, 5, 9, 2, 6, 5).

-

Initial Array:

- Input:

[3, 1, 4, 5, 9, 2, 6, 5] - Pivot:

4(middle element)

- Input:

-

Partitioning:

- Left:

[3, 1, 2](elements less than4) - Middle: “(elements equal to

4) - Right:

[5, 9, 6, 5](elements greater than4)

- Left:

-

Recursive Calls:

-

Sort Left:

quick_sort([3, 1, 2])- Pivot:

1 - Left:

[] - Middle:

[1] - Right:

[3, 2]- Sort Right:

quick_sort([3, 2])- Pivot:

2 - Left:

[] - Middle:

[2] - Right: “

- Pivot:

- Combined Result for Right:

[2, 3]

- Sort Right:

- Combined Result for Left:

[1] + [] + [2, 3] = [1, 2, 3]

- Pivot:

-

Sort Right:

quick_sort([5, 9, 6, 5])- Pivot:

9 - Left:

[5, 6] - Middle: “

- Right:

[]- Sort Left:

quick_sort([5, 6])- Pivot:

6 - Left: “

- Middle: “

- Right:

[]

- Pivot:

- Combined Result for Left:

+ + [] = [5, 6]

- Sort Left:

- Pivot:

-

-

Final Combination: Combine all parts:

Sorted Array = [1, 2, 3] + [4] + [5, 6] + [9] = [1, 2, 3, 4, 5, 5, 6, 9]

Conclusion

The Quick Sort algorithm efficiently sorts arrays using a divide-and-conquer approach. Its average and best-case time complexity is , while its worst-case time complexity is . The example traced above demonstrates how Quick Sort operates on a given set of data by recursively partitioning and sorting sub-arrays.

14) Write Merge sort algorithm and compute its worst case and best-case time complexity. Sort the List G,U,J,A,R,A,T in alphabetical order using merge sort.

Merge Sort Algorithm

Merge Sort is a divide-and-conquer algorithm that sorts an array by recursively dividing it into two halves, sorting each half, and then merging the sorted halves back together.

Merge Sort Algorithm

Here’s a simple implementation of Merge Sort in Python:

def merge_sort(arr):

if len(arr) > 1:

mid = len(arr) // 2 # Finding the mid of the array

left_half = arr[:mid] # Dividing the elements into 2 halves

right_half = arr[mid:]

merge_sort(left_half) # Sorting the first half

merge_sort(right_half) # Sorting the second half

i = j = k = 0

# Copy data to temp arrays L[] and R[]

while i < len(left_half) and j < len(right_half):

if left_half[i] < right_half[j]:

arr[k] = left_half[i]

i += 1

else:

arr[k] = right_half[j]

j += 1

k += 1

# Checking if any element was left

while i < len(left_half):

arr[k] = left_half[i]

i += 1

k += 1

while j < len(right_half):

arr[k] = right_half[j]

j += 1

k += 1

# Example usage:

data = ['G', 'U', 'J', 'A', 'R', 'A', 'T']

merge_sort(data)

print(data) # Output will be sorted listTime Complexity of Merge Sort

-

Best Case: The best-case time complexity occurs when the array is already sorted. However, even in this case, Merge Sort still divides and merges the array, leading to:

- Time Complexity:

-

Worst Case: The worst-case time complexity occurs when the array is in reverse order. Similar to the best case, it still divides and merges, resulting in:

- Time Complexity:

Sorting the List G, U, J, A, R, A, T Using Merge Sort

Let’s apply the Merge Sort algorithm to sort the list alphabetically.

-

Initial List:

['G', 'U', 'J', 'A', 'R', 'A', 'T'] -

Divide:

- Split into two halves:

- Left:

['G', 'U', 'J'] - Right:

['A', 'R', 'A', 'T']

- Left:

- Split into two halves:

-

Recursive Sorting:

- Sort Left:

- Split

['G', 'U', 'J']into['G']and['U', 'J'] - Sort

['U', 'J']into['J', 'U'] - Merge to get

['G', 'J', 'U']

- Split

- Sort Right:

- Split

['A', 'R', 'A', 'T']into['A', 'R']and['A', 'T'] - Both halves are sorted as they have one or two elements.

- Merge to get

['A', 'A', 'R', 'T']

- Split

- Sort Left:

-

Combine Sorted Halves:

- Merge

['G', 'J', 'U']and['A', 'A', 'R', 'T']: - Resulting Sorted List:

['A', 'A', 'G', 'J', 'R', 'T', 'U']

- Merge

Final Output

After applying Merge Sort to the list G, U, J, A, R, A, T, the sorted list in alphabetical order is:

['A', 'A', 'G', 'J', 'R', 'T', 'U']Conclusion

Merge Sort is an efficient sorting algorithm with a consistent time complexity of for best, average, and worst cases. The algorithm effectively sorts the provided list alphabetically through recursive division and merging.

15) Write divide and conquer algorithm to solve Exponential problem. Also solve29 using same algorithm.

Divide and Conquer Algorithm to Solve the Exponential Problem

The exponential problem typically refers to calculating powers of a number, such as . The divide and conquer approach can be effectively used to compute efficiently, especially for large values of .

Algorithm

The algorithm works as follows:

-

Base Case:

- If , return 1 (since any number raised to the power of 0 is 1).

- If , return (since any number raised to the power of 1 is itself).

-

Divide:

- If is even, calculate:

- If is odd, calculate:

-

Conquer:

- Recursively compute the required powers.

-

Combine:

- Combine the results from the recursive calls.

Pseudocode

Here’s the pseudocode for the divide and conquer algorithm to compute :

function power(x, n):

if n == 0:

return 1

if n == 1:

return x

if n is even:

half_power = power(x, n / 2)

return half_power * half_power

else:

return x * power(x, n - 1)Example: Calculate

Let’s solve using the above algorithm.

-

Initial Call:

power(2, 9)- Since is odd:

-

Next Call:

power(2, 8)- Since is even:

-

Next Call:

power(2, 4)- Since is even:

-

Next Call:

power(2, 2)- Since is even:

-

Next Call:

power(2, 1)- Base case reached, return .

Now we can combine the results:

- From

power(2, 1)we get . - From

power(2, 2)we compute: - From

power(2, 4)we compute: - From

power(2, 8)we compute: - Finally, from

power(2, 9)we compute:

Conclusion

Using the divide and conquer approach, we have computed that . This method is efficient and reduces the number of multiplications needed compared to a straightforward iterative approach. The time complexity of this algorithm is , making it very efficient for large values of .

16) Demonstrate Binary Search method to search Key = 14, form the array A=<2,4,7,8,10,13,14,60>

Demonstrating Binary Search Method

To demonstrate the Binary Search method for searching a key in a sorted array, we will use the provided array and search for the key .

Steps of Binary Search

-

Initial Setup:

- Define the initial boundaries:

left = 0(the index of the first element)right = len(A) - 1(the index of the last element)

- Define the initial boundaries:

-

Iterative Process:

- While

leftis less than or equal toright, do the following:- Calculate the middle index:

- Compare the middle element with the key:

- If , return the index (key found).

- If , adjust the left boundary:

- If , adjust the right boundary:

- While

-

Key Not Found:

- If the loop exits without finding the key, return an indication that the key is not present (e.g., return -1).

Example: Searching for Key = 14

Let’s trace through the binary search algorithm step by step:

- Initial Array:

- Key to Search:

Iteration Steps

-

First Iteration:

left = 0,right = 7- Calculate

mid: - Compare with :

- Since , update

left:

- Since , update

-

Second Iteration:

left = 4,right = 7- Calculate

mid: - Compare with :

- Since , update

left:

- Since , update

-

Third Iteration:

left = 6,right = 7- Calculate

mid: - Compare with :

- Since , we have found the key at index 6.

Conclusion

The Binary Search method successfully found the key at index 6 in the array .

This method is efficient with a time complexity of , making it suitable for searching in sorted arrays.

17) Apply merge sort algorithm on array A = {2,7,3,5,1,9,4,8}. What is time complexity of merge sort in worst case?

Applying Merge Sort on Array A = {2, 7, 3, 5, 1, 9, 4, 8}

Merge Sort is a divide-and-conquer algorithm that sorts an array by recursively dividing it into two halves, sorting each half, and then merging the sorted halves back together.

Steps of Merge Sort

-

Initial Array:

-

Divide:

- Split the array into two halves:

-

Recursive Sorting:

- Sort the left half :

- Split into and

- Sort :

- Already sorted.

- Sort :

- Already sorted.

- Merge and :

- Result:

- Sort the right half :

- Split into and

- Sort :

- Already sorted.

- Sort :

- Already sorted.

- Merge and :

- Result:

- Sort the left half :

-

Combine Sorted Halves:

- Merge the two sorted halves:

- Merging and

- Resulting Sorted Array:

Time Complexity of Merge Sort

- The time complexity of Merge Sort in the worst case is:

This complexity arises because the array is divided in half at each level of recursion (which contributes to the logarithmic factor), and merging takes linear time (which contributes to the linear factor). Thus for an array of size , the overall complexity is .

Conclusion

The sorted array after applying Merge Sort on is , and the worst-case time complexity of Merge Sort is .

18) Multiply 981 by 1234 by divide and conquer method.

To multiply two large integers using the divide and conquer method, we can use Karatsuba’s algorithm, which is an efficient way to perform multiplication by reducing the number of multiplicative operations required.

Steps to Multiply 981 by 1234 Using Karatsuba’s Algorithm

-

Split the Numbers:

- Let and .

- We can split these numbers into two halves:

- For :

- (the first digit)

- (the remaining digits)

- For :

- (the first two digits)

- (the last two digits)

- For :

-

Calculate the Products: Using the Karatsuba formula:

where is the number of digits in the smaller half.

Here, we have:

- (since we are working with two-digit numbers)

Now we compute:

- Calculate

- Calculate

- Calculate

-

Performing the Calculations:

-

Calculate :

-

Calculate :

-

Calculate : First, calculate:

Now compute:

Calculate : Then,

-

-

Combine the Results: Now substitute back into the Karatsuba formula:

Substituting the values we found:

- : Calculating each term:

- Adding them together: Calculating the total:

Conclusion

Using the divide and conquer method with Karatsuba’s algorithm, we find that:

This efficient multiplication method reduces the number of operations needed compared to traditional multiplication methods.

19) Sort the following list using quick sort algorithm:< 5, 3 ,8 ,1 ,4 ,6 ,2 ,7 > Alsowrite Worst and Best case and Average case of quick sort algorithm.

Sorting the List Using Quick Sort Algorithm

To sort the list < 5, 3, 8, 1, 4, 6, 2, 7 > using the Quick Sort algorithm, we will follow these steps:

- Choose a Pivot: Select an element from the array as the pivot. A common choice is to select the last element.

- Partitioning: Rearrange the array so that elements less than the pivot come before it and elements greater than the pivot come after it.

- Recursively Apply: Recursively apply the above steps to the sub-arrays of elements with smaller and larger values.

Quick Sort Implementation

Here’s how Quick Sort would work on the given list:

-

Initial List:

[5, 3, 8, 1, 4, 6, 2, 7] -

First Call:

- Choose pivot:

7 - Partitioning:

- Elements less than

7:[5, 3, 1, 4, 6, 2] - Elements equal to

7: “ - Elements greater than

7: “

- Elements less than

- Combined:

[5, 3, 1, 4, 6, 2] + [7] + [8] - Choose pivot:

-

Recursive Call on Left Sub-array:

[5, 3, 1, 4, 6, 2]- Choose pivot:

2 - Partitioning:

- Less than

2:[] - Equal to

2:[2] - Greater than

2:[5, 3, 1, 4, 6]

- Less than

- Combined:

[] + [2] + [5, 3, 1, 4, 6] - Choose pivot:

-

Recursive Call on Right Sub-array:

[5, 3, 1, 4, 6]- Choose pivot:

6 - Partitioning:

- Less than

6:[5, 3, 1, 4] - Equal to

6: “ - Greater than

6:[]

- Less than

- Combined:

[5, 3, 1, 4] + [6] + [] - Choose pivot:

-

Continue Recursion:

-

For

[5, 3, 1, 4], choose pivot4:- Less than

4:[3,1] - Equal to

4: “ - Greater than

4: “ - Combined:

[3 ,1] + [4] + [5] - Less than

-

For

[3 ,1], choose pivot1:- Less than

1:[] - Equal to

1:[1] - Greater than

1: “ - Combined:

[] + [1] + [3] - Less than

-

Now combine all parts:

[1] + [2] + [3] + [4] + [5] + [6] + [7] + [8]Final Sorted List

The sorted list is:

[1 ,2 ,3 ,4 ,5 ,6 ,7 ,8]Time Complexity of Quick Sort

-

Best Case: The best-case time complexity occurs when the pivot divides the array into two equal halves at each level of recursion.

- Time Complexity:

-

Average Case: The average-case time complexity also results in balanced partitions on average.

- Time Complexity:

-

Worst Case: The worst-case time complexity occurs when the smallest or largest element is consistently chosen as the pivot (e.g., when the array is already sorted or reverse sorted).

- Time Complexity:

Summary

The Quick Sort algorithm efficiently sorts the list <5, 3 ,8 ,1 ,4 ,6 ,2 ,7> into <1 ,2 ,3 ,4 ,5 ,6 ,7 ,8>. Its time complexity varies based on the choice of pivot and input arrangement but generally operates in for best and average cases and for the worst case.

20) What is the basic nature of greedy strategy? Give the characteristics of Greedy Algorithms.

Basic Nature of Greedy Strategy

The greedy strategy is an algorithmic approach that builds up a solution piece by piece, always choosing the next piece that offers the most immediate benefit or the best local solution. The greedy method aims to find a global optimum by selecting the best option available at each step without considering the broader consequences.

In essence, greedy algorithms make a sequence of choices, each of which looks best at the moment, with the hope that these local optimum choices will lead to a global optimum.

Characteristics of Greedy Algorithms

-

Local Optimal Choice:

- Greedy algorithms make decisions based on the best option available at that moment, without considering future consequences. This means they focus on immediate benefits.

-

Feasibility:

- Each choice made must be feasible; that is, it must satisfy the problem’s constraints and contribute to a valid solution.

-

Irrevocability:

- Once a choice is made, it cannot be undone. This means that the algorithm does not backtrack to reconsider previous decisions.

-

Optimal Substructure:

- A problem exhibits optimal substructure if an optimal solution to the problem contains optimal solutions to its subproblems. Greedy algorithms often rely on this property to ensure that local choices lead to a global optimum.

-

Efficiency:

- Greedy algorithms are typically more efficient than other approaches (like dynamic programming) because they do not require extensive computation or storage of intermediate results.

-

Simplicity:

- Greedy algorithms are generally easier to implement and understand due to their straightforward decision-making process.

Conclusion

Greedy algorithms are powerful tools for solving optimization problems where local choices can lead to a globally optimal solution. However, it is essential to analyze whether a greedy approach will yield an optimal solution for a specific problem, as it does not guarantee optimality in all cases.

21) Mention applications of minimum spanning tree.

Applications of Minimum Spanning Tree (MST)

Minimum Spanning Trees (MST) have various applications across different fields. Here are some notable applications:

-

Network Design:

- MST is used in designing network layouts, such as computer networks, telecommunications, and transportation networks. The goal is to connect all points (nodes) with the minimum total edge (cost) while ensuring no cycles.

-

Cluster Analysis:

- In data analysis, MST can be applied to cluster data points. By treating data points as vertices and distances as edges, MST helps identify clusters by connecting closely related points.

-

Approximation Algorithms:

- MST is often used in approximation algorithms for complex problems, such as the Traveling Salesman Problem (TSP). By finding an MST, one can create a tour that approximates the optimal solution.

-

Image Processing:

- In image segmentation, MST can help partition an image into segments by treating pixels as nodes and pixel similarity as edges. This allows for efficient processing and analysis of images.

-

Supply Chain Management:

- MST can optimize the layout of supply chains by minimizing transportation costs between suppliers, warehouses, and customers.

-

Road Construction:

- When planning road networks, MST can help minimize the cost of connecting various locations while ensuring that all areas are accessible.

-

Electrical Grid Design:

- In electrical engineering, MST helps design efficient power distribution networks by connecting substations and consumers with minimal wiring cost.

-

Telecommunication Networks:

- For designing efficient communication networks like fiber optics or wireless networks, MST ensures that all stations are connected with minimal cable length or signal loss.

-

Game Development:

- In computer graphics and game development, MST can be used for rendering scenes efficiently by minimizing the number of edges in a graph representing a scene.

These applications highlight the versatility of Minimum Spanning Trees in solving real-world problems across various domains, emphasizing their importance in optimization and network design.

22) Suppose that we have a set of activities to schedule among a large number of lecture halls, where any activity can take place in any lecture hall. We wish to schedule all the activities using as few lecture halls as possible. Give an efficient greedy algorithm to determine which activity should use which lecture hall.

To efficiently schedule a set of activities in lecture halls using a greedy algorithm, we can utilize a technique similar to the Activity Selection Problem. The goal is to assign activities to the minimum number of lecture halls while ensuring that no two activities overlap in the same hall.

Greedy Algorithm for Activity Scheduling

-

Sort Activities:

- First, sort the activities based on their start times (or end times, depending on your preference). This helps in efficiently determining which activities can be scheduled together.

-

Initialize Data Structures:

- Use a priority queue or a list to keep track of the end times of the activities currently assigned to each lecture hall.

-

Iterate Through Activities:

- For each activity, check if it can be assigned to an existing lecture hall:

- If the activity starts after the earliest ending activity in the priority queue (or list), assign it to that hall and update the end time.

- If not, allocate a new lecture hall for this activity.

- For each activity, check if it can be assigned to an existing lecture hall:

-

Output:

- Return the assignment of activities to lecture halls.

Pseudocode

Here’s a simple pseudocode representation of the algorithm:

function scheduleActivities(activities):

// Sort activities by start time

sort(activities by start time)

// Initialize a list to keep track of end times of assigned activities

endTimes = []

// Assign each activity to a lecture hall

for each activity in activities:

// Check if this activity can be assigned to an existing hall

if endTimes is not empty and activity.start >= endTimes[0]:

// Reassign the hall (remove the earliest ending time)

pop endTimes[0]

// Add this activity's end time to the list

endTimes.append(activity.end)

// The number of lecture halls used is equal to the size of endTimes

return length(endTimes)Example

Let’s consider an example set of activities with their start and end times:

- Activity 1: (1, 3)

- Activity 2: (2, 4)

- Activity 3: (3, 5)

- Activity 4: (0, 6)

- Activity 5: (5, 7)

- Activity 6: (3, 9)

- Activity 7: (5, 9)

Steps:

-

Sort Activities by start time:

[(0, 6), (1, 3), (2, 4), (3, 5), (3, 9), (5, 7), (5, 9)] -

Assign Activities:

- Assign Activity 1 (1,3) to Hall A.

- Assign Activity 2 (2,4) to Hall B.

- Assign Activity 3 (3,5) to Hall A.

- Assign Activity 4 (0,6) to Hall C.

- Assign Activity 5 (5,7) to Hall A.

- Assign Activity 6 (3,9) to Hall D.

- Assign Activity 7 (5,9) will go into Hall E.

-

Result:

- The algorithm will yield a schedule that uses multiple halls efficiently based on overlapping times.

Time Complexity

- The time complexity of this greedy algorithm is due to sorting the activities initially and for iterating through them. Thus, the overall complexity is dominated by the sorting step.

Conclusion

This greedy algorithm effectively assigns activities to lecture halls while minimizing the number of halls used. By always choosing the earliest finishing activity that can fit into a hall, we ensure efficient scheduling.

23) Write greedy algorithm for activity selection problem. Give its time complexity. For following intervals, select the activities according to your algorithm. I1 (1-3), I2 (0- 2), I3 (3-6), I4 (2-5), I5 (5-8), I6 (3-10), I6(7-9).

Greedy Algorithm for Activity Selection Problem

The Activity Selection Problem involves selecting the maximum number of activities that don’t overlap, given their start and finish times. The greedy approach to solve this problem is to always select the next activity that finishes the earliest and is compatible with the previously selected activities.

Steps of the Greedy Algorithm

- Sort Activities: Sort all activities based on their finish times.

- Select Activities:

- Initialize an empty list to hold the selected activities.

- Select the first activity from the sorted list and add it to the selected activities.

- For each subsequent activity, if its start time is greater than or equal to the finish time of the last selected activity, select it.

Pseudocode

function activitySelection(activities):

// Sort activities by finish time

sort(activities by finish time)

// Select the first activity

selectedActivities = []

selectedActivities.append(activities[0])

// The end time of the last selected activity

lastFinishTime = activities[0].finish

for i from 1 to length(activities) - 1:

if activities[i].start >= lastFinishTime:

selectedActivities.append(activities[i])

lastFinishTime = activities[i].finish

return selectedActivitiesTime Complexity

- The time complexity of this algorithm is dominated by the sorting step, which is , where is the number of activities. The selection process itself takes , so the overall time complexity remains .

Selecting Activities from Given Intervals

Given intervals:

- I1: (1, 3)

- I2: (0, 2)

- I3: (3, 6)

- I4: (2, 5)

- I5: (5, 8)

- I6: (3, 10)

- I7: (7, 9)

Step-by-Step Selection Process

-

Sort Activities by Finish Time:

- Sorted intervals based on finish times:

- I2: (0, 2)

- I1: (1, 3)

- I4: (2, 5)

- I3: (3, 6)

- I6: (3, 10)

- I5: (5, 8)

- I7: (7, 9)

- Sorted intervals based on finish times:

-

Select Activities:

- Start with an empty list of selected activities.

- Select I2 (0, 2) as it finishes first.

- Next activity is I1 (1, 3), but it overlaps with I2; skip it.

- Select I4 (2, 5) as it starts after I2 finishes.

- Next is I3 (3, 6); select it as it starts after I4 finishes.

- Skip I6 (3, 10) because it overlaps with I3.

- Select I5 (5, 8) as it starts after I3 finishes.

- Finally, select I7 (7, 9) as it starts after I5 finishes.

Final Selected Activities

The selected activities are:

- I2: (0, 2)

- I4: (2, 5)

- I3: (3, 6)

- I5: (5, 8)

- I7: (7, 9)

Conclusion

The greedy algorithm for the Activity Selection Problem efficiently selects non-overlapping activities based on their finish times. The time complexity of this algorithm is , making it suitable for practical applications in scheduling and resource allocation.

24) Give and Explain the Prim’s Algorithm to find out Minimum Spanning Treewith illustration.

Prim’s Algorithm for Minimum Spanning Tree

Prim’s Algorithm is a greedy algorithm that finds a minimum spanning tree (MST) for a weighted undirected graph. The algorithm builds the MST by starting from an arbitrary vertex and growing the tree one edge at a time, always choosing the smallest edge that connects a vertex in the tree to a vertex outside the tree.

Steps of Prim’s Algorithm

-

Initialization:

- Start with an arbitrary vertex as the initial MST.

- Create an empty set to hold the vertices included in the MST.

- Create a priority queue (or min-heap) to keep track of edges based on their weights.

-

Add Vertices:

- Add the initial vertex to the MST set.

- Add all edges from this vertex to the priority queue.

-

Build the MST:

- While there are still vertices not included in the MST:

- Extract the edge with the minimum weight from the priority queue.

- If it connects a vertex in the MST to a vertex outside, add this edge and the new vertex to the MST set.

- Add all edges from this new vertex to the priority queue that connect to vertices not yet in the MST.

- While there are still vertices not included in the MST:

-

Repeat until all vertices are included in the MST.

Pseudocode

function prim(graph, start_vertex):

mst_set = {} // Set of vertices in the MST

edges = priority_queue() // Min-heap for edges

total_weight = 0

// Initialize with starting vertex

mst_set.add(start_vertex)

for each edge in graph.edges[start_vertex]:

edges.push(edge)

while mst_set.size < graph.vertex_count:

min_edge = edges.pop() // Get edge with minimum weight

if min_edge.destination not in mst_set:

mst_set.add(min_edge.destination)

total_weight += min_edge.weight

// Add new edges to priority queue

for each edge in graph.edges[min_edge.destination]:

if edge.destination not in mst_set:

edges.push(edge)

return total_weight, mst_setIllustration of Prim’s Algorithm

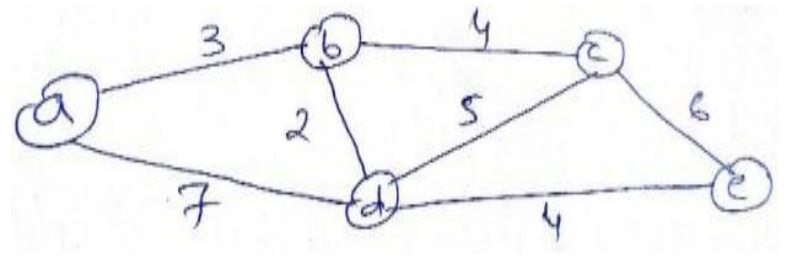

Let’s illustrate Prim’s Algorithm with an example graph:

- Graph Representation:

2

(A)------(B)

| \ |

3| \1 |4

| \ |

(C)------(D)

5- Vertices: A, B, C, D

- Edges with Weights:

- A-B: 2

- A-C: 3

- A-D: 1

- B-D: 4

- C-D: 5

- Steps of Prim’s Algorithm:

-

Step 1: Start from vertex A.

-

MST Set: {A}

-

Priority Queue: [(A-D, 1), (A-B, 2), (A-C, 3)]

-

Step 2: Select edge A-D (weight = 1).

-

MST Set: {A, D}

-

Total Weight: 1

-

Priority Queue: [(A-B, 2), (A-C, 3), (B-D, 4), (C-D, 5)]

-

Step 3: Select edge A-B (weight = 2).

-

MST Set: {A, B, D}

-

Total Weight: 3

-

Priority Queue: [(A-C, 3), (B-D, 4), (C-D, 5)]

-

Step 4: Select edge A-C (weight = 3).

-

MST Set: {A, B, C, D}

-

Total Weight: 6

-

Priority Queue is empty now.

Final Result

The Minimum Spanning Tree consists of edges:

- A-D (weight = 1)

- A-B (weight = 2)

- A-C (weight = 3)

The total weight of the MST is 6, and it connects all vertices with minimal total edge weight.

Time Complexity

The time complexity of Prim’s algorithm depends on how you implement it:

- Using an adjacency matrix and simple array for priority queue:

- Time Complexity: where is the number of vertices.

- Using an adjacency list and a binary heap for priority queue:

- Time Complexity: where is the number of edges and is the number of vertices.

Conclusion

Prim’s Algorithm is an efficient method for finding a Minimum Spanning Tree in a weighted undirected graph. It systematically builds up the MST by selecting edges based on their weights and ensures that all vertices are connected with minimal total weight.

25) Give and Explain the Kruskal’s Algorithm to find out Minimum Spanning Treewith illustration.

Kruskal’s Algorithm for Minimum Spanning Tree

Kruskal’s Algorithm is a greedy algorithm that finds a minimum spanning tree (MST) for a connected, weighted graph. The algorithm works by sorting all the edges in non-decreasing order of their weights and adding them one by one to the MST, ensuring that no cycles are formed.

Steps of Kruskal’s Algorithm

-

Sort Edges:

- Begin by sorting all the edges of the graph in ascending order based on their weights.

-

Initialize MST:

- Create an empty set to hold the edges of the MST.

-

Union-Find Data Structure:

- Use a union-find (disjoint-set) data structure to keep track of which vertices are in which components. This helps in detecting cycles efficiently.

-

Process Edges:

- Iterate through the sorted edge list and for each edge:

- Check if adding this edge would form a cycle using the union-find structure.

- If it does not form a cycle, add it to the MST and perform a union operation to merge the two sets.

- If it does form a cycle, skip this edge.

- Iterate through the sorted edge list and for each edge:

-

Repeat until there are edges in the MST, where is the number of vertices in the graph.

Pseudocode

function kruskal(graph):

mst = [] // List to store the edges of the minimum spanning tree

edges = sort(graph.edges) // Sort edges by weight

uf = createUnionFind(graph.vertices) // Initialize union-find structure

for each edge in edges:

u, v = edge.start, edge.end

if uf.find(u) != uf.find(v): // Check if u and v are in different components

mst.append(edge) // Add edge to MST

uf.union(u, v) // Union the two vertices

return mstIllustration of Kruskal’s Algorithm

Let’s illustrate Kruskal’s Algorithm with an example graph:

- Graph Representation:

1

(A)------(B)

| \ |

3| \4 |2

| \ |

(C)------(D)

5- Vertices: A, B, C, D

- Edges with Weights:

- A-B: 1

- A-C: 3

- A-D: 4

- B-D: 2

- C-D: 5

- Steps of Kruskal’s Algorithm:

- Step 1: Sort all edges by weight:

1. A-B (1)

2. B-D (2)

3. A-C (3)

4. A-D (4)

5. C-D (5)-

Step 2: Initialize an empty MST and union-find structure.

-

Step 3: Process each edge in sorted order:

-

Add edge A-B (1):

- No cycle is formed; add to MST.

- MST now contains: {A-B}.

-

Add edge B-D (2):

- No cycle is formed; add to MST.

- MST now contains: {A-B, B-D}.

-

Add edge A-C (3):

- No cycle is formed; add to MST.

- MST now contains: {A-B, B-D, A-C}.

-

Add edge A-D (4):

- Adding this would create a cycle with vertices A, B, and D.

- Skip this edge.

-

Add edge C-D (5):

- Adding this would also create a cycle.

- Skip this edge.

-

- Final Result: The edges included in the Minimum Spanning Tree are:

- A-B (1)

- B-D (2)

- A-C (3)

Final Minimum Spanning Tree

The resulting Minimum Spanning Tree includes the edges:

A-B (1)

B-D (2)

A-C (3)Time Complexity

- The time complexity of Kruskal’s algorithm is dominated by two factors:

- Sorting the edges takes , where is the number of edges.

- The union-find operations can be performed in nearly constant time , where is the number of vertices and is the inverse Ackermann function.

Thus, the overall time complexity is:

Conclusion

Kruskal’s Algorithm efficiently finds a Minimum Spanning Tree for a weighted undirected graph by sorting edges and using a union-find structure to avoid cycles. It is particularly useful for sparse graphs where is much less than .

26) Define spanning tree and MST. How Krushkal’s algorithm is different fromPrim’s algorithm.

Definitions

Spanning Tree: A spanning tree of a connected, undirected graph is a subgraph that includes all the vertices of the original graph and is a tree (i.e., it has no cycles). A spanning tree connects all the vertices together without any cycles and with the minimum possible number of edges, which is for a graph with vertices.

Minimum Spanning Tree (MST): A Minimum Spanning Tree is a spanning tree that has the smallest possible total edge weight among all spanning trees of a graph. In other words, it connects all the vertices with the least total edge weight while still maintaining the properties of a tree.

Differences Between Kruskal’s Algorithm and Prim’s Algorithm

Both Kruskal’s Algorithm and Prim’s Algorithm are greedy algorithms used to find the Minimum Spanning Tree of a graph, but they differ in their approach:

| Feature | Kruskal’s Algorithm | Prim’s Algorithm |

|---|---|---|

| Approach | Works by sorting all edges and adding them one by one to the MST if they do not form a cycle. | Starts from an arbitrary vertex and grows the MST by adding edges that connect to the growing set of vertices. |

| Edge Selection | Considers edges in sorted order based on their weights. | Selects the minimum weight edge that connects a vertex in the MST to a vertex outside of it. |

| Data Structure | Uses a disjoint-set (union-find) data structure to keep track of connected components and detect cycles. | Typically uses a priority queue or min-heap to efficiently get the next minimum edge. |

| Graph Type | Can work on both connected and disconnected graphs (producing a forest). | Only works on connected graphs; if applied to disconnected graphs, it will not produce a valid MST. |

| Time Complexity | due to sorting edges, where is the number of edges. | , where is the number of vertices, especially when using binary heaps for priority queues. |

Conclusion

In summary, both algorithms are effective for finding Minimum Spanning Trees but are suited to different situations based on how they process edges and their underlying data structures. Kruskal’s focuses on edges first, while Prim’s focuses on expanding from vertices.

27) Design and explain Dijkstra’s shortest path algorithm.

Dijkstra’s Shortest Path Algorithm

Dijkstra’s Algorithm is a popular algorithm used to find the shortest path from a source vertex to all other vertices in a weighted graph with non-negative edge weights. It is particularly useful in various applications, such as routing and navigation systems.

Steps of Dijkstra’s Algorithm

-

Initialization:

- Set the distance to the source vertex to 0 and the distance to all other vertices to infinity.

- Create a priority queue (or min-heap) to store vertices based on their current shortest distance from the source.

- Add the source vertex to the priority queue.

-

Main Loop:

- While the priority queue is not empty:

- Extract the vertex with the smallest distance (let’s call it

current). - For each neighbor of

current, calculate the potential new distance throughcurrent: - If

new_distanceis less than the currently known distance to that neighbor, update the neighbor’s distance and add it to the priority queue.

- Extract the vertex with the smallest distance (let’s call it

- While the priority queue is not empty:

-

Termination:

- The algorithm terminates when all vertices have been processed, and the shortest distances from the source vertex to all other vertices are finalized.

Pseudocode

function dijkstra(graph, source):

// Initialize distances and priority queue

distances = {}

for each vertex in graph:

distances[vertex] = infinity

distances[source] = 0

priority_queue = min-heap()

priority_queue.push(source, 0) // Push source with distance 0

while not priority_queue.is_empty():

current = priority_queue.pop() // Get vertex with smallest distance

for each neighbor in graph[current]:

new_distance = distances[current] + weight(current, neighbor)

if new_distance < distances[neighbor]:

distances[neighbor] = new_distance

priority_queue.push(neighbor, new_distance)

return distancesExample Illustration

Let’s illustrate Dijkstra’s algorithm with a simple example graph:

(2)

A -------- B

| \ /|

(1)| (4) (3)|

| \ / |

C ---- D |

(5) |

|

E- Vertices: A, B, C, D, E

- Edges with Weights:

- A-B: 2

- A-C: 1

- B-D: 3

- C-D: 4

- C-E: 5

- D-E: 1

Steps of Dijkstra’s Algorithm on this Graph

-

Initialization:

- Distances:

- A: 0 (source)

- B: ∞

- C: ∞

- D: ∞

- E: ∞

- Distances:

-

Processing:

- Start with A:

- Update neighbors B and C:

- Distance to B = 0 + 2 = 2 → Update B’s distance.

- Distance to C = 0 + 1 = 1 → Update C’s distance.

- Update neighbors B and C:

- Distances now:

- A: 0, B: 2, C: 1, D: ∞, E: ∞

- Start with A:

-

Next Vertex (C):

- Current vertex is C (smallest distance):

- Update neighbors D and E:

- Distance to D = 1 + 4 = 5 → Update D’s distance.

- Distance to E = 1 + 5 = 6 → Update E’s distance.

- Update neighbors D and E:

- Distances now:

- A: 0, B: 2, C: 1, D: 5, E: 6

- Current vertex is C (smallest distance):

-

Next Vertex (B):

- Current vertex is B:

- Update neighbor D:

- Distance to D = 2 + 3 = 5 → No update needed since it’s already at its minimum.

- Update neighbor D:

- Distances remain:

- A: 0, B: 2, C: 1, D: 5, E: 6

- Current vertex is B:

-

Next Vertex (D):

- Current vertex is D:

- Update neighbor E:

- Distance to E = 5 + 1 = 6 → No update needed.

- Update neighbor E:

- Distances remain:

- A: 0, B: 2, C: 1, D: 5, E: 6

- Current vertex is D:

-

Final Vertex (E):

- Current vertex is E; no further updates possible.

Final Result

The shortest path distances from vertex A are:

- To A: 0

- To B: 2

- To C: 1

- To D: 5

- To E: 6

Time Complexity

The time complexity of Dijkstra’s algorithm depends on how you implement the priority queue:

- Using an adjacency matrix and a simple array for the priority queue results in a time complexity of , where is the number of vertices.

- Using an adjacency list along with a binary heap or Fibonacci heap can reduce this to , where is the number of edges.

Conclusion

Dijkstra’s algorithm efficiently finds the shortest paths from a source vertex to all other vertices in a weighted graph with non-negative weights. Its greedy nature ensures that at each step it makes the best local choice by selecting the closest unvisited vertex.

28) What are the differences between greedy approach and dynamic programming approach? Given n jobs J1 , J2, ….Jn having execution deadlines d1 , d2, …, dn. Design an algorithm using greedy approach to schedule these jobs as per earliest deadline first.

Differences Between Greedy Approach and Dynamic Programming Approach

| Feature | Greedy Approach | Dynamic Programming |

|---|---|---|

| Strategy | Makes the best local choice at each step without reconsidering previous choices. | Breaks the problem into overlapping subproblems and solves each subproblem only once, storing the results. |

| Optimality | Does not guarantee an optimal solution for all problems; works well for specific problems where local choices lead to a global optimum. | Guarantees an optimal solution by considering all possible solutions to subproblems. |

| Problem Structure | Suitable for problems with the greedy choice property and optimal substructure. | Suitable for problems with optimal substructure and overlapping subproblems. |

| Efficiency | Generally faster due to fewer computations; often runs in linear or logarithmic time. | Typically slower due to storing results and solving overlapping subproblems; may run in polynomial time. |

| Implementation Complexity | Usually simpler and easier to implement. | More complex due to the need for memoization or tabulation. |

Greedy Algorithm for Job Scheduling (Earliest Deadline First)

To schedule jobs based on their deadlines using the greedy approach, we can implement an Earliest Deadline First (EDF) algorithm. The idea is to sort the jobs by their deadlines and then schedule them in that order.

Steps of the Algorithm

- Input Jobs: Each job has a deadline associated with it.

- Sort Jobs: Sort the jobs based on their deadlines in ascending order.

- Schedule Jobs: Iterate through the sorted list of jobs and schedule them as long as they can be completed before their respective deadlines.

Pseudocode

function scheduleJobs(jobs):

// Sort jobs based on their deadlines

sort(jobs by deadline

scheduled_jobs = [] // List to store scheduled jobs

current_time = 0 // Initialize current time

for each job in jobs:

if current_time < job.deadline: // If job can be completed before its deadline

scheduled_jobs.append(job) // Schedule the job

current_time += job.execution_time // Update current time

return scheduled_jobsExample

Let’s illustrate this with an example:

- Jobs with Deadlines:

- Job J1: Execution Time = 2, Deadline = 4

- Job J2: Execution Time = 1, Deadline = 2

- Job J3: Execution Time = 3, Deadline = 5

- Job J4: Execution Time = 1, Deadline = 3

Step-by-Step Execution

-

Input Jobs:

Jobs: [(J1, 4), (J2, 2), (J3, 5), (J4, 3)] -

Sort Jobs by Deadline:

Sorted Jobs: [(J2, 2), (J4, 3), (J1, 4), (J3, 5)] -

Schedule Jobs:

- Initialize

current_time = 0 - Schedule J2 (Execution Time = 1):

- Current Time = 0 + 1 = 1 (within deadline)

- Schedule J4 (Execution Time = 1):

- Current Time = 1 + 1 = 2 (within deadline)

- Schedule J1 (Execution Time = 2):

- Current Time = 2 + 2 = 4 (within deadline)

- J3 cannot be scheduled as it would require current_time + execution_time > deadline.

- Initialize

Final Scheduled Jobs

The scheduled jobs are:

- J2

- J4

- J1

Conclusion

The greedy algorithm for scheduling jobs based on the earliest deadline is efficient and straightforward. It sorts the jobs by their deadlines and schedules them accordingly as long as they can be completed before their respective deadlines. This method is particularly effective when dealing with scheduling problems where minimizing lateness or meeting deadlines is crucial.

29) Using greedy algorithm find an optimal solution for knapsack instance n=7,M = 15 (P1, P2, P3, P4, P5, P6, P7) = (10, 5, 15, 7, 6, 18, 3) and (w1, w2, w3, w4, w5, w6, w7) = (2, 3, 5, 7, 1, 4, 1).

To solve the knapsack problem using a greedy algorithm, we will focus on the fractional knapsack variant, where we can take fractions of items. The greedy approach is optimal for this version of the problem.

Problem Definition

Given:

- Number of items

- Maximum weight capacity of the knapsack

- Profits

- Weights

Steps to Solve Using Greedy Algorithm

-

Calculate Value-to-Weight Ratio: For each item, calculate the ratio of profit to weight:

-

Sort Items: Sort the items based on their value-to-weight ratio in descending order.

-

Select Items: Iterate through the sorted list and add items to the knapsack until it reaches its capacity:

- If the entire item can be added without exceeding capacity, add it completely.

- If not, add as much of the item as possible (i.e., take a fraction).

Implementation

Step 1: Calculate Value-to-Weight Ratios

| Item | Profit (P) | Weight (W) | Ratio |

|---|---|---|---|

| P1 | 10 | 2 | 5.0 |

| P2 | 5 | 3 | 1.67 |

| P3 | 15 | 5 | 3.0 |

| P4 | 7 | 7 | 1.0 |

| P5 | 6 | 1 | 6.0 |

| P6 | 18 | 4 | 4.5 |

| P7 | 3 | 1 | 3.0 |

Step 2: Sort Items by Ratio

Sorted items based on their value-to-weight ratio:

- P5: (6, 1) → Ratio: 6.0

- P1: (10, 2) → Ratio: 5.0

- P6: (18, 4) → Ratio: 4.5

- P3: (15, 5) → Ratio: 3.0

- P7: (3, 1) → Ratio: 3.0

- P2: (5, 3) → Ratio: 1.67

- P4: (7, 7) → Ratio: 1.0

Step 3: Select Items

Now we will select items based on the sorted list until we reach the maximum weight capacity :

-

Add Item P5:

- Weight = , Profit =

- Total Weight = , Total Profit =

-

Add Item P1:

- Weight = , Profit =

- Total Weight = , Total Profit =

-

Add Item P6:

- Weight = , Profit =

- Total Weight = , Total Profit =

-

Add Item P3:

- Weight = , Profit =

- Total Weight = , Total Profit =

-

Add Item P7:

- Weight = , Profit =

- Total Weight = , Total Profit =

-

Add Item P2:

- Weight = , Profit =

- Total Weight would be which exceeds capacity.

Since adding item P2 would exceed the capacity of the knapsack, we will not add it.

Final Result

The optimal selection of items for maximum profit without exceeding the weight limit is:

- Selected Items: P5, P1, P6, P3, and P7.

- Total Weight: 13

- Total Profit: 52

Conclusion

Using a greedy algorithm based on the value-to-weight ratio allows us to efficiently determine an optimal solution for this fractional knapsack problem instance with maximum profit while respecting the weight constraints.

30) Consider Kanpsack capacity W=50, w=(10,20,40) and v=(60,80,100) find the maximum profit using greedy approach.

To solve the knapsack problem with a capacity of using a greedy approach, we need to consider the value-to-weight ratio for each item. The greedy approach is optimal for the fractional knapsack variant but may not yield an optimal solution for the 0/1 knapsack problem. However, we will proceed with the greedy method as requested.

Given Data

- Weights:

- Values:

Step 1: Calculate Value-to-Weight Ratios

We’ll calculate the value-to-weight ratio for each item:

| Item | Weight () | Value () | Value-to-Weight Ratio () |

|---|---|---|---|

| 1 | 10 | 60 | |

| 2 | 20 | 80 | |

| 3 | 40 | 100 |

Step 2: Sort Items by Value-to-Weight Ratio

Now we will sort the items based on their value-to-weight ratios in descending order:

- Item 1: Ratio =

- Item 2: Ratio =

- Item 3: Ratio =

Step 3: Select Items Using Greedy Approach

We will select items based on the sorted list until we reach the knapsack capacity of :

-

Add Item 1:

- Weight = , Value =

- Total Weight = , Total Value =

-

Add Item 2:

- Weight = , Value =

- Total Weight = , Total Value =

-

Add Item 3:

- Weight = , Value =

- Adding this item would exceed the capacity since:

- Current Total Weight + Item Weight =